Since "Normal Maps" are "Non Color" data, I expect them to be kept linear when saving. What does "Linear Color Space" mean, and how can I ensure that no gamma conversion is applied when the image is saved? The "Exposure and Gamma" setting in Photoshop also confuses me.

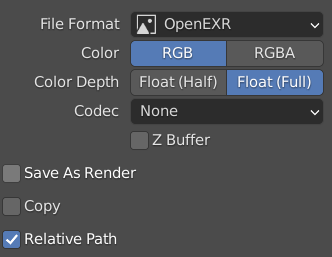

They should be saved correctly! Just be sure that 'Save as Render' is not checked while saving so that no color management or gamma conversion will be applied.

Photoshop, on the other hand, is a bit different. I'm not sure exactly how it works, but leaving the exposure at 0 and the gamma at 1.0 will keep it from messing things up.

Here's the important bit: if the image was baked as sRGB, import it as non-color, but if the image was baked as linear (as all 32-bit images in Blender are), import it as sRGB, whether or not you process it through Photoshop. What we don't want is to apply the sRGB correction twice or to skip it entirely.
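To make the "twice or skip it entirely" point concrete, here is a minimal Python sketch that applies the standard sRGB decoding curve (IEC 61966-2-1) zero, one and two times to the same stored value. The function name `srgb_to_linear` is our own, and the sketch only illustrates the arithmetic; it does not model what Blender actually does during baking or import.

```python
def srgb_to_linear(v: float) -> float:
    """Standard sRGB decoding (IEC 61966-2-1) for one channel in [0, 1]."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


stored = 0.5  # e.g. the X or Y channel of a flat tangent-space normal

skipped = stored                                # transform never applied
once = srgb_to_linear(stored)                   # transform applied exactly once
twice = srgb_to_linear(srgb_to_linear(stored))  # transform applied twice

for label, value in (("skipped", skipped), ("once", once), ("twice", twice)):
    print(f"{label:>7}: {value:.4f}")
```

Each extra application pushes the value further down, which is why the transform has to happen exactly as many times as the pipeline expects.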

The term "linear" confuses me in this context, since I always think of a "Linear Workflow" as working with the raw image data without any gamma correction. Now, when I read that a "Normal Map" baked in "Linear Color Space" needs the "sRGB" import setting, this suggests to me that Blender internally undoes a gamma correction: under the hood, Blender uses the "Linear Workflow" for its calculations, while the display can use the gamma-corrected image directly. Unchecking the "Save As Render" option is a point where I can follow again.

Can I put it like this? sRGB is just a way of describing colors (though not all possible colors) and is not linked to any gamma correction. Converting something to sRGB means squeezing the color space of an image so that colors lying outside sRGB are shifted into it.

The Blender image texture import setting "sRGB", on the other hand, assumes that the image is also gamma corrected, so Blender converts it for its internal "Linear Workflow" calculations. In other words, Blender associates sRGB with gamma correction when importing image textures, although sRGB and gamma correction are not linked to each other.

I can highly recommend reading this series of articles by Troy Sobotka:

And if you haven't seen it yet, here's the brilliant Bartek Skorupa at the Blender Conference (Color Management for humans):

https://www.youtube.com/watch?v=kVKnhJN-BrQ

Neither is a direct answer to your question, but both are extremely helpful for understanding what is going on with the extremely complicated thing so loosely called 'color'.

Thanks, spikeyxxx, for the links. The definition of "color" and the implementation of its adjustment in Blender is really a lot more complicated than it seems at first sight, and it's really important to find a correct explanation. You, the team at CGCookie and of course Bartek Skorupa are among the best sources for this.

> The definition of "color" and the implementation of its adjustment in Blender is really a lot more complicated than it seems at first sight

Absolutely! There are very few people who totally grasp how it works, and I would not yet put myself in that category.

> Converting something to sRGB means squeezing the color space of an image so that colors being outside of sRGB are shifted into this color space.

Yes! That's exactly it.

For normal maps specifically, think about it this way: the Normal Map node needs to use an image that's been converted to sRGB. If it's baked in sRGB, you wouldn't want to apply the conversion again when importing, so we set the node to non-color data. If it's baked in linear, we haven't yet converted it to sRGB, so we set the node to sRGB and the Normal Map node gets what it expects. The transform should only happen one time throughout the entire process - either during the baking process itself or when importing.

I'd highly recommend reading through the series Spikey linked to above. It's very helpful! I haven't gotten all the way through it yet but it's quite good so far.

So the sRGB setting has nothing to do with gamma correction? Then how does Blender know whether it has to undo a gamma correction for its internal linear calculations?

Ah whoops, missed that part of the question above. As far as I know, the gamma correction is just one part of the conversion from one color space to another. Blender isn't smart or 'undoing' anything - it either applies a transformation to the source before using it or it doesn't.
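As a rough illustration of "gamma is just one part of the conversion", here is a sketch of converting an sRGB-encoded pixel to CIE XYZ. First the transfer function (the "gamma" part) is undone per channel, then a 3x3 matrix handles the change of primaries and white point. The matrix is the commonly published sRGB (D65) to XYZ matrix rounded to four decimals. This is our own sketch, not Blender's code; Blender delegates such conversions to OpenColorIO.

```python
def srgb_to_linear(v: float) -> float:
    """Undo the sRGB transfer function (the 'gamma' part) for one channel."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


# Linear sRGB (Rec. 709 primaries, D65 white point) to CIE XYZ.
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def srgb_to_xyz(rgb):
    # Step 1: transfer function, applied to each channel independently.
    linear = [srgb_to_linear(c) for c in rgb]
    # Step 2: change of primaries, a matrix that mixes all three channels.
    return tuple(sum(m * c for m, c in zip(row, linear)) for row in SRGB_TO_XYZ)


white = srgb_to_xyz((1.0, 1.0, 1.0))
grey = srgb_to_xyz((0.5, 0.5, 0.5))
print(white, grey)
```

A pure "gamma" tool would only ever do step 1; a full color space conversion also needs step 2.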

@jlampel I've taken another look into the "Blender 2.90 Manual" concerning the "Color Management" here:

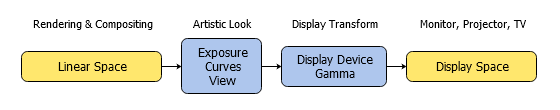

I think the first setting in the "Color Management" panel only applies a gamma correction for a certain device, which by default is an sRGB display. Squeezing colors into a color space, where they would otherwise simply be clipped, is part of the "View Transform" setting. By default this is "Filmic", which doesn't only convert colors into sRGB but also gives them a look as if they had been captured on real film:

"There is also an artistic choice to be made. Partially that is because display devices cannot display the full spectrum of colors and only have limited brightness, so we can squeeze the colors to fit in the gamut of the device. Besides that, it can also be useful to give the renders a particular look, e.g. as if they have been printed on real camera film. The default Filmic transform does this."

Conversion from linear to display device space.

If you don't want any display gamma correction, set "Display Device" to "None". Unchecking the "Save as Render" option in the "Save As" dialog only disables the transformations from "View Transform" down to "Gamma" in the "Color Management" panel. The "Gamma" setting in that same panel is an additional gamma conversion on top of the device-specific gamma correction and exists only for artistic reasons:

"Gamma: Extra gamma correction applied after color space conversion. Note that the default display transforms already perform the appropriate conversion, so this mainly acts as an additional effect for artistic tweaks."
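Here is a simplified model of the two "artistic" controls, assuming exposure is a multiplier in stops (2 raised to the exposure) applied to the linear values and the extra gamma is a power of 1/gamma applied afterwards, ignoring the view transform in between. The function `exposure_gamma` is hypothetical and not taken from Blender's or Photoshop's source, but it shows why exposure 0 and gamma 1.0 leave the values untouched.

```python
def exposure_gamma(value: float, exposure: float = 0.0, gamma: float = 1.0) -> float:
    """Hypothetical model: scale by 2**exposure (stops), then raise to 1/gamma."""
    scaled = value * 2.0 ** exposure
    return scaled ** (1.0 / gamma)


samples = [0.0, 0.18, 0.5, 1.0]

defaults = [exposure_gamma(v) for v in samples]                # exposure 0, gamma 1: identity
brighter = [exposure_gamma(v, exposure=1.0) for v in samples]  # +1 stop doubles every value
regamma = [exposure_gamma(v, gamma=2.2) for v in samples]      # extra gamma lifts the mid-tones

print(defaults, brighter, regamma, sep="\n")
```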

I think the term "Color Space Conversion" can be confusing, because the question is what that conversion includes. Does it only mean a gamma correction, or does it also mean that colors are shifted to make them visible on a certain device, so that, for example, areas that would otherwise be overexposed show all their detail after the shift? Once again: in my opinion, the "Display Device" setting in the "Color Management" panel by default only applies a gamma correction for sRGB devices, and no color shifting like the one done under "View Transform" with the "Filmic" setting.

If you load an image texture with the "sRGB" setting, I suppose Blender only applies an inverse gamma so that it can calculate with the original color values. Unfortunately, the "Blender Manual" doesn't explicitly say what the color space conversion does here. If there is a difference between "Non Color Data" and "Linear Space", I would be happy to get an insider's look at what exactly happens. I suppose that "Non Color Data" is already in "Linear Space", which, in my opinion, is what this diagram from the "Color Management" chapter of the "Blender 2.90 Manual" expresses:

An example of a linear workflow.

The double arrow and the missing color circle between "Non-Color Data" and "Linear Space" seem to indicate that there is no conversion at all. Nevertheless, I'm wondering what the effect is of the 4 different "Color Space" settings for "Image Textures", ranging in the dropdown menu from "Linear" to "Raw".

If there were someone familiar with Blender's source who could give a precise explanation of what happens under the hood, that would help to remove the confusion.

Yeah! I don't know enough about the details here to answer exactly. You could try asking the folks over at https://devtalk.blender.org/ or https://blender.stackexchange.com/

Troy messaged me about this thread and filled me in on more details. To better answer your original question:

> Since "Normal Maps" are "Non Color" data, I expect them to be kept linear when saving. What does "Linear Color Space" mean and how can I ensure that it doesn't get any gamma conversion when being saved?

He said:

> Normal maps should *not* be linear, as it implies linear *colour*. They should be flagged as data! They do not represent colours, but rather data.
>
> And to answer the second part of the question, when OCIO finds a data transform, under V1, it keeps the buffer out of the management chain entirely. On the other hand, if it were set to “Linear Colour”, it would assume the ratios in the buffer are in fact light / reflectance colour ratios, and in fact keep them *in* the management chain. Hope that helps with some clarity.
>
> Always ask yourself “Does this buffer indicate an emission or a reflectance?” If your answer is “No”, non color data is imperative to set.
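Troy's point can be checked numerically. Tangent-space normal maps conventionally store each vector component n in [-1, 1] as a value c = (n + 1) / 2 in [0, 1], so the shader unpacks it as n = 2c - 1. The sketch below (our own illustration, not Blender's shader code) unpacks a stored flat normal once as raw data and once after an sRGB decode, which is roughly what flagging the texture as sRGB color would add.

```python
import math


def srgb_to_linear(v: float) -> float:
    """Standard sRGB decoding for one channel in [0, 1]."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def unpack_normal(rgb):
    """Map stored [0, 1] values back to a [-1, 1] vector: n = 2c - 1."""
    return tuple(2.0 * c - 1.0 for c in rgb)


def length(v):
    return math.sqrt(sum(c * c for c in v))


stored = (0.5, 0.5, 1.0)  # a flat normal pointing straight along +Z

as_data = unpack_normal(stored)                                     # Non-Color: no transform
as_color = unpack_normal(tuple(srgb_to_linear(c) for c in stored))  # sRGB decode first

print(as_data, length(as_data))
print(as_color, length(as_color))
```

Read as data, the flat normal comes back as (0, 0, 1) with unit length; read as color, it tilts and is no longer unit length, so the shading changes even though nothing in the file did.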

Does that help answer the above question?

@spikeyxxx The term "gamma" is used in a variety of contexts in mathematics and science, as can be seen for example here (probably not a complete list and not the best source, but just for demonstration purposes). Therefore a proper definition is crucial. In image processing and manipulation, I understand "gamma" as the manipulation of pixel brightness (I know this term also needs a proper definition to distinguish it from "Value", "Luminosity", "Luminance" etc.). It reflects not only the non-linear response of early cathode ray tube (CRT) monitors to input voltages but also the sensitivity of the human eye to slight differences in brightness, which is better in the lower range of brightness values than in the higher range (compare a small candle in a dark room with the same candle in broad daylight on a sunny day). Therefore it makes sense to map pixels with slight brightness differences in the higher range to the same stored value, so that the image is more compressed in that range. This leaves more room for the darker pixels, so that the slight brightness differences our eyes can perceive there can be saved in the file.
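A minimal Python sketch of the "more room for the darker pixels" argument: count how many of the 256 values of an 8-bit channel fall below 18% linear grey when the values are stored linearly versus with the sRGB transfer function. The 0.18 threshold is a conventional mid-grey chosen only for illustration.

```python
def srgb_to_linear(v: float) -> float:
    """Standard sRGB decoding for one channel in [0, 1]."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


MID_GREY = 0.18  # a common scene-linear mid-grey reference

codes = range(256)  # every value an 8-bit channel can store

# Stored linearly, code c simply means c / 255.
linear_count = sum(1 for c in codes if c / 255 < MID_GREY)
# Stored with the sRGB curve, code c means srgb_to_linear(c / 255).
srgb_count = sum(1 for c in codes if srgb_to_linear(c / 255) < MID_GREY)

print(f"codes below mid grey, linear encoding: {linear_count}")
print(f"codes below mid grey, sRGB encoding:   {srgb_count}")
```

With the sRGB curve, well over twice as many codes describe the darker part of the range, at the cost of coarser steps in the highlights.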

The question for me, in the context of saving image files with Blender's "Color Management", is whether the conversion for a certain output device (in most cases probably an sRGB monitor) involves only a gamma correction or more. The "Blender Manual" defines the "Color Space" in its "Glossary" here:

- Color Space

A coordinate system in which a vector represents a color value. By doing so, the color space defines three things:

- The exact color of each of the Primaries
- The White Point
- A transfer function

And "sRGB" directly below this:

- sRGB

A color space that uses the Rec. 709 Primaries and white point but with a slightly different transfer function.

And "Primaries" are defined in the "Blender Manual" here as follows:

- Primaries

In color theory, primaries (often known as primary colors) are the abstract lights, using an absolute model, that make up a Color Space.
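The "transfer function" in these definitions is the part usually called gamma. For sRGB it is a piecewise curve: a short straight segment near black, then a power of 1/2.4 with an offset, which approximates but is not identical to a plain 2.2 gamma. A Python sketch using the published IEC 61966-2-1 constants (function names are our own):

```python
def linear_to_srgb(x: float) -> float:
    """sRGB encoding: linear segment near black, offset 1/2.4 power above it."""
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1 / 2.4) - 0.055


def srgb_to_linear(v: float) -> float:
    """Inverse of linear_to_srgb."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def pure_gamma_22(x: float) -> float:
    """A plain power-law 'gamma 2.2' encoding, for comparison."""
    return x ** (1 / 2.2)


for x in (0.001, 0.01, 0.18, 0.5, 1.0):
    print(f"{x:6.3f}  sRGB {linear_to_srgb(x):.4f}  gamma 2.2 {pure_gamma_22(x):.4f}")
```

The two curves agree closely in the mid-tones and differ most near black, where the sRGB curve switches to its linear segment.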

Therefore, I suppose that if you set "sRGB" as "Output Device" and don't check "Save as Render" when saving a rendered image, Blender will only calculate the necessary "Gamma" and make sure that "White" appears as "White" on an "sRGB Device". I don't know what this "Transfer Function" is and to what extent it's taken into account. What Blender probably does not do when converting an image for an "Output Device" is "squeeze" the colors of a rendered image to avoid clamping, so that pixels with different, very bright colors won't be displayed as structureless pure white areas.

I'm especially thinking of Blender Guru's video "The Secret Ingredient of Photorealism" here, and its comparison at 21:39 of a render with "View Transform" set to "Default sRGB" and to "Filmic" in Blender 2.78. Also see what Blender Guru says in this video from 8:51 on about the connection between "Color Management" and "Dynamic Range".
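The difference between clipping and "squeezing" can be sketched with a simple tone curve on a single brightness channel. The Reinhard operator x / (1 + x) used here is a well-known textbook stand-in; it is *not* the curve Filmic actually uses, and the sketch ignores color entirely.

```python
def clip(x: float) -> float:
    """What a plain display conversion does with out-of-range values: clamp them."""
    return min(max(x, 0.0), 1.0)


def reinhard(x: float) -> float:
    """A simple tone curve that compresses [0, inf) into [0, 1)."""
    return x / (1.0 + x)


# Scene-linear brightness values, from shadows to very bright highlights.
scene = [0.1, 0.5, 1.0, 2.0, 4.0, 8.0]

clipped = [clip(x) for x in scene]
squeezed = [reinhard(x) for x in scene]

for x, c, s in zip(scene, clipped, squeezed):
    print(f"{x:4.1f} -> clipped {c:.3f}, squeezed {s:.3f}")
```

Clipping turns every value at or above 1.0 into the same white, so highlight detail is lost; the tone curve keeps those values distinct at the cost of compressing the rest of the range.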

Thanks, @jlampel, the first and third comments by Troy are really helpful; the second one is rather technical and cryptic to me. The big question is always whether Blender treats my texture in a way that doesn't corrupt it for later use. So, as Troy said, a "Normal Map" should be treated as "Data" and not be transformed in any way. I would therefore set the "Output Device" in "Color Management" to "None", save the file with "Save as Render" unchecked so that no "View Transform" is applied, and then load the file as "Non Color Data".

I think that's overcomplicating it slightly: changing the Display Device doesn't change anything about the image itself, only how Blender sends it to the display. Saving it with sRGB, XYZ, or None set makes no difference. Beyond that, unchecking Save as Render (should be off by default for bakes) and loading it as Non-Color is spot on 👍